Before you deploy the vCenter Server Appliance, there are a few things you should verify. Proper name resolution is a must, so ensure that DNS in your environment is configured correctly and that you have created both an A (host) record and a reverse lookup (PTR) record for your vCenter Server Appliance.
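One way to confirm both records before deploying is a small forward-and-reverse lookup check. The following is only a minimal sketch, assuming Python 3 on a workstation that uses the same DNS servers as the appliance. The function name `check_dns` and the name `vcsa01.example.local` are hypothetical placeholders, not anything from the vCSA itself.

```python
import socket


def check_dns(fqdn, forward=socket.gethostbyname, reverse=socket.gethostbyaddr):
    """Resolve fqdn to an IP (A record), then resolve that IP back (PTR record).

    Returns (ip, ptr_name, ok), where ok is True when the PTR name matches
    the FQDN, ignoring case and a trailing dot. The two resolver arguments
    default to the standard socket calls and exist only so the check can be
    exercised without a live DNS server.
    """
    ip = forward(fqdn)           # forward lookup: name -> address
    ptr_name = reverse(ip)[0]    # reverse lookup: address -> primary name
    ok = ptr_name.rstrip(".").lower() == fqdn.rstrip(".").lower()
    return ip, ptr_name, ok


# Example call (hypothetical name; performs real DNS queries when uncommented):
# print(check_dns("vcsa01.example.local"))
```

If either lookup fails, the socket calls raise an `OSError` subclass, which is itself a sign that a record is missing.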

The installation of the vCenter Server Appliance is a little unorthodox, as you actually need to exit the initial configuration wizard to properly install vCenter Single Sign On (SSO).

vCenter Single Sign On is a part of VMware Cloud Suite. Although it can be installed separately it is an integrated feature starting with vCenter 5.1. For additional information on SSO please refer to VMware’s vCenter Single Sign On FAQ.

The reason you need to exit the configuration wizard is that SSO is largely based on tokens and certificates, so the hostname of the vCenter Server Appliance (vCSA) must be properly set and verified before SSO is initialized.

Deploying the appliance from OVF is a matter of importing it. Let’s look at the steps once you have imported it and it is booted properly.

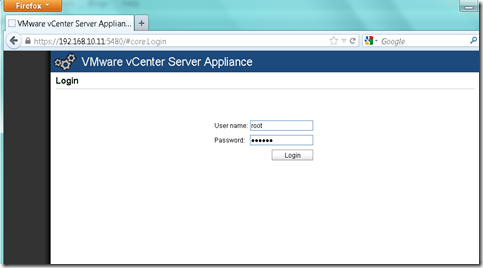

The vCSA will use DHCP by default. To begin the configuration, browse to the assigned IP on port 5480 (e.g. http://192.168.10.220:5480) as shown in figure 1.10.

figure 1.10

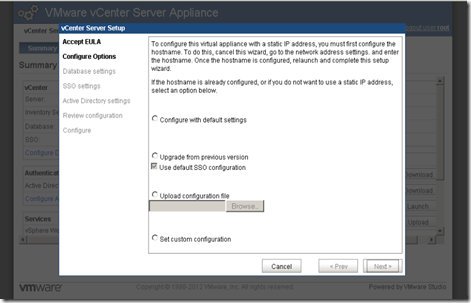

The default login is root with the password “vmware”. The wizard runs and prompts you to accept the license agreement; accept it and click Next. On the Configure Options page you are prompted to cancel the wizard and configure the hostname and static IP address before proceeding, as shown in figure 1.11.

figure 1.11

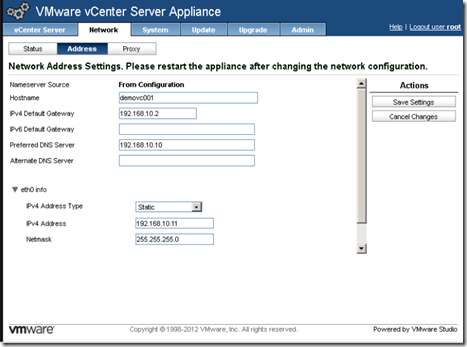

After you cancel the wizard, select the Network tab and configure the hostname and network properties, including the static IP, as shown in figure 1.12.

One thing I have noticed is that the hostname does not always apply properly through the graphical user interface. As I mentioned, however, it is important that the hostname is properly applied before SSO is configured and initialized.

figure 1.12

Joining the appliance to the domain is the other step that seems to throw errors unless it is completed on the command line first.

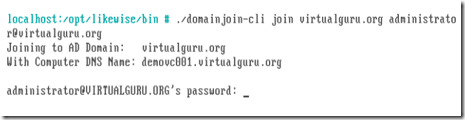

You can join the vCSA to the domain and configure the hostname from the command line. The process is similar to adding a vMA (vSphere Management Assistant) virtual machine to the domain and uses the same domainjoin-cli command.

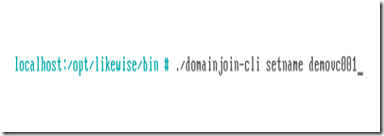

The domainjoin-cli command is included in the vCSA and can be found in the /opt/likewise/bin directory.

SSH is enabled by default for the root account, so you can use either the console or SSH to reach the vCSA. The default password for the root account is “vmware” unless it has been changed. With domainjoin-cli you can update the hostname using the command domainjoin-cli setname [hostname], as shown in figure 1.13.

figure 1.13

After the hostname is set you can use domainjoin-cli once again to join the vCSA to the domain using the command domainjoin-cli join [domain name] [administrator@domain] as shown in figure 1.14. You will be prompted for the password to complete the process.

figure 1.14
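The two domainjoin-cli steps above can be sketched as a small helper that assembles the command lines in the right order (hostname first, then the domain join). This is only an illustration, assuming Python 3 on a workstation: it prints the commands for you to run in the console or SSH session and does not execute anything. The function names and the sample hostname, domain and account are hypothetical placeholders; the binary path and the setname and join subcommands are the ones described in this post.

```python
# Path and subcommands as described in this post.
DOMAINJOIN = "/opt/likewise/bin/domainjoin-cli"


def setname_cmd(hostname):
    """Command line that sets the appliance hostname."""
    return [DOMAINJOIN, "setname", hostname]


def join_cmd(domain, admin_user):
    """Command line that joins the domain; the password is prompted for."""
    return [DOMAINJOIN, "join", domain, admin_user]


def planned_commands(hostname, domain, admin_user):
    """Return both command lines as strings, hostname step first."""
    steps = [setname_cmd(hostname), join_cmd(domain, admin_user)]
    return [" ".join(step) for step in steps]


# Hypothetical values; substitute your own hostname, domain and account.
for line in planned_commands("vcsa01.example.local", "example.local",
                             "administrator@example.local"):
    print(line)
```

Keeping the password out of the command line matches the behaviour described above, where domainjoin-cli prompts for it interactively.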

Once you have completed these two commands successfully, you can log back in to the GUI and complete the wizard to set up the database and configure SSO. The wizard also enables Active Directory, but this time it should complete with no errors.

When you log in to the GUI you will need to rerun the Setup Wizard from the Utilities pane by clicking the Launch button.

Under Configure Options, select “Set custom configuration” and click Next.

If you are using the native SQL database, select embedded from the Database type dropdown (otherwise enter your specific database settings) and click Next.

If you are running SSO on the vCSA, select embedded from the SSO deployment type dropdown and click Next.

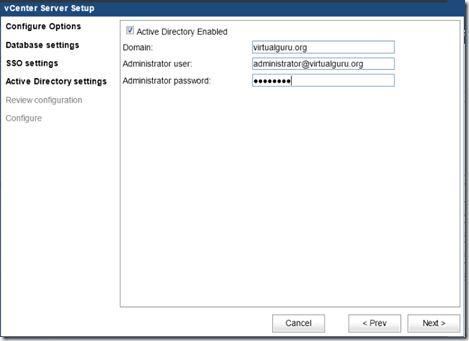

On the Active Directory Settings page, select Active Directory Enabled, enter your Domain, Administrator User and Administrator password, and click Next as shown in figure 1.15.

figure 1.15

Review the configuration and click “Start” to begin the configuration. Ensure everything installs, configures and starts correctly.

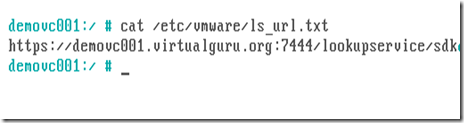

The setup automatically installs SSO with a randomly generated password. Because you do not know that password, you cannot install the vSphere Web Client in another location: its installer asks for the SSO administrator name and password and the Lookup Service URL.

You can verify the SSO Lookup Service URL from the command line by running cat /etc/vmware/ls_url.txt, as shown in figure 1.16.

figure 1.16

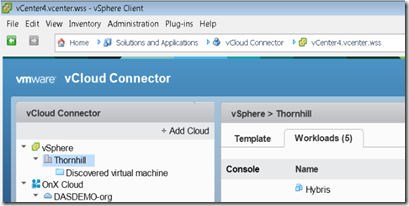
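Since the whole point of setting the hostname first is that SSO picks it up, it can be worth checking that the host in the Lookup Service URL matches the name you configured. The following is a minimal sketch, assuming Python 3 and that you have copied the contents of /etc/vmware/ls_url.txt off the appliance. The function name and the sample URL, including its port and path, are hypothetical and shown only to illustrate the comparison.

```python
from urllib.parse import urlparse


def lookup_url_matches(url_text, expected_fqdn):
    """Return True when the host part of the URL equals the expected FQDN.

    url_text is the raw file content (a trailing newline is fine).
    The comparison ignores case.
    """
    host = urlparse(url_text.strip()).hostname or ""
    return host.lower() == expected_fqdn.lower()


# Hypothetical file content; your URL will differ.
sample = "https://vcsa01.example.local:7444/lookupservice/sdk\n"
print(lookup_url_matches(sample, "vcsa01.example.local"))
```

A mismatch here would suggest that SSO was initialized before the hostname was applied.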

As we are going to look at the VMware vSphere Web Client in the next post I will leave the configuration and details till then.